Natural Language Processing: NLP With Transformers in Python by Udemy

Learn next-generation NLP with transformers for sentiment analysis, Q&A, similarity search, NER, and more

Course Highlights

- Industry-standard NLP using transformer models

- Build full-stack question-answering transformer models

- Perform sentiment analysis with transformer models in PyTorch and TensorFlow

- Advanced search technologies like Elasticsearch and Facebook AI Similarity Search (FAISS)

- Create fine-tuned transformer models for specialized use cases

- Measure the performance of language models using metrics such as exact match (EM) and ROUGE

- Retrieval techniques such as sparse BM25 and dense passage retrieval (DPR)

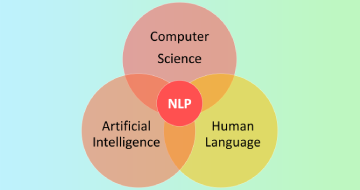

- An overview of recent developments in NLP

- Understand attention and other key components of transformers

- Learn about key transformer models such as BERT

- Preprocess text data for NLP

- Named entity recognition (NER) using spaCy and transformers

- Fine-tune language classification models

Curriculum

8 Topics

Introduction

Course Overview

Hello! and Further Resources

Environment Setup

Alternative Local Setup

Alternative Colab Setup

CUDA Setup

Apple Silicon Setup

10 Topics

The Three Eras of AI

Pros and Cons of Neural AI

Word Vectors

Recurrent Neural Networks

Long Short-Term Memory

Encoder-Decoder Attention

Self-Attention

Multi-head Attention

Positional Encoding

Transformer Heads

9 Topics

Stopwords

Tokens Introduction

Model-Specific Special Tokens

Stemming

Lemmatization

Unicode Normalization - Canonical and Compatibility Equivalence

Unicode Normalization - Composition and Decomposition

Unicode Normalization - NFD and NFC

Unicode Normalization - NFKD and NFKC
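
A short illustration of the four normalization forms, using Python's standard-library `unicodedata.normalize`. The example characters (a precomposed and a decomposed "é", plus the "ﬁ" ligature) are chosen for illustration and are not taken from the course.

```python
import unicodedata

# Two canonically equivalent spellings of "é"
composed = "\u00e9"      # single code point U+00E9
decomposed = "e\u0301"   # "e" followed by U+0301 COMBINING ACUTE ACCENT

print(composed == decomposed)  # False: different code points, same rendered text

# Canonical forms: NFD splits characters apart, NFC recombines them
nfd = unicodedata.normalize("NFD", composed)
nfc = unicodedata.normalize("NFC", decomposed)
print(nfd == decomposed, nfc == composed)  # True True

# Compatibility equivalence: the "ﬁ" ligature (U+FB01) is only folded by the K forms
ligature = "\ufb01"
print(unicodedata.normalize("NFC", ligature) == ligature)  # True: canonical forms leave it alone
print(unicodedata.normalize("NFKD", ligature))             # fi
print(unicodedata.normalize("NFKC", ligature))             # fi
```

Because NFC and NFD only apply canonical mappings, they are safe when the visual form must be preserved; NFKC and NFKD additionally fold compatibility characters such as ligatures into their plain equivalents.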

6 Topics

Attention Introduction

Alignment With Dot-Product

Dot-Product Attention

Self-Attention

Bidirectional Attention

Multi-head and Scaled Dot-Product Attention
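
A minimal single-head sketch of scaled dot-product attention in plain Python, following the formula softmax(Q K^T / sqrt(d_k)) V. Multi-head attention would run several of these in parallel on learned projections of Q, K and V and concatenate the results; those projections are omitted here, and the function names are hypothetical.

```python
import math

def softmax(scores):
    # Subtract the max for numerical stability before exponentiating
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Single-head attention over lists of vectors: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Alignment: dot product of this query with every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output: attention-weighted sum of the value vectors
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs

# Self-attention: queries, keys and values all come from the same sequence
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(scaled_dot_product_attention(x, x, x))
```

The sqrt(d_k) scaling keeps the dot products from growing with the vector size, which would otherwise push the softmax toward a near one-hot distribution.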

5 Topics

Introduction to Sentiment Analysis

Prebuilt Flair Models

Introduction to Sentiment Models With Transformers

Tokenization And Special Tokens For BERT

Making Predictions

7 Topics

Project Overview

Getting the Data (Kaggle API)

Preprocessing

Building a Dataset

Dataset Shuffle, Batch, Split, and Save

Build and Save

Loading and Prediction

2 Topics

Classification of Long Text Using Windows

Window Method in PyTorch
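
One common way to implement the window method, sketched in plain Python rather than PyTorch: split the token IDs into chunks of at most 510 tokens, wrap each in [CLS] and [SEP] so it fits BERT's 512-token limit, pad, run the classifier on every window, and average the per-window probabilities. The IDs 101, 102 and 0 are assumed to be the [CLS], [SEP] and [PAD] IDs of `bert-base-uncased`; the model call itself is left out, and the helper names are hypothetical.

```python
def split_into_windows(token_ids, window_size=510, stride=510,
                       cls_id=101, sep_id=102, pad_id=0):
    """Return (input_ids, attention_mask) pairs, each window_size + 2 tokens long.

    A stride smaller than window_size produces overlapping windows (stride must be > 0).
    """
    windows = []
    start = 0
    while True:
        chunk = token_ids[start:start + window_size]
        ids = [cls_id] + chunk + [sep_id]
        mask = [1] * len(ids)
        padding = (window_size + 2) - len(ids)
        ids += [pad_id] * padding   # pad a short final window
        mask += [0] * padding       # padding positions are masked out
        windows.append((ids, mask))
        if start + window_size >= len(token_ids):
            break                   # this window already reaches the end of the text
        start += stride
    return windows

def average_probabilities(window_probs):
    """Average per-window class probabilities into one prediction for the whole text."""
    return [sum(column) / len(window_probs) for column in zip(*window_probs)]

windows = split_into_windows([5, 6, 7, 8, 9, 10], window_size=4, stride=4)
for ids, mask in windows:
    print(ids, mask)
# [101, 5, 6, 7, 8, 102] [1, 1, 1, 1, 1, 1]
# [101, 9, 10, 102, 0, 0] [1, 1, 1, 1, 0, 0]
```

Averaging probabilities is only one aggregation choice; taking the maximum per class is an alternative when a single strongly worded window should dominate.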

10 Topics

Introduction to spaCy

Extracting Entities

NER Walkthrough

Authenticating With The Reddit API

Pulling Data With The Reddit API

Extracting ORGs From Reddit Data

Getting Entity Frequency

Entity Blacklist

NER With Sentiment

NER With RoBERTa

7 Topics

Open Domain and Reading Comprehension

Retrievers, Readers, and Generators

Intro to SQuAD 2.0

Processing SQuAD Training Data

(Optional) Processing SQuAD Training Data with Match-Case

Processing SQuAD Dev Data

Our First Q&A Model

6 Topics

Q&A Performance With Exact Match (EM)

Introducing the ROUGE Metric

ROUGE in Python

Applying ROUGE to Q&A

Recall, Precision, and F1

Longest Common Subsequence (LCS)
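
The metrics in this section can be sketched from scratch: exact match compares normalized strings, and ROUGE-L derives recall, precision and F1 from the longest common subsequence (LCS) of the predicted and reference tokens. This is an illustrative implementation with simplified normalization (lowercasing and whitespace splitting), not the API of any ROUGE package, so its scores may differ slightly from library output.

```python
def normalize(text):
    # Simplified normalization: lowercase and split on whitespace
    return text.lower().split()

def exact_match(prediction, reference):
    """1 if the normalized strings are identical, else 0."""
    return int(normalize(prediction) == normalize(reference))

def lcs_length(a, b):
    """Length of the longest common subsequence via dynamic programming."""
    dp = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i in range(1, len(a) + 1):
        for j in range(1, len(b) + 1):
            if a[i - 1] == b[j - 1]:
                dp[i][j] = dp[i - 1][j - 1] + 1
            else:
                dp[i][j] = max(dp[i - 1][j], dp[i][j - 1])
    return dp[len(a)][len(b)]

def rouge_l(prediction, reference):
    """ROUGE-L recall, precision and F1 computed from the LCS of the two token lists."""
    pred, ref = normalize(prediction), normalize(reference)
    lcs = lcs_length(pred, ref)
    if lcs == 0:
        return {"recall": 0.0, "precision": 0.0, "f1": 0.0}
    recall = lcs / len(ref)        # share of the reference that was recovered
    precision = lcs / len(pred)    # share of the prediction that matches the reference
    f1 = 2 * precision * recall / (precision + recall)
    return {"recall": recall, "precision": precision, "f1": f1}

print(exact_match("The Eiffel Tower", "the eiffel tower"))  # 1
print(rouge_l("the cat sat on the mat", "the cat is on the mat"))
```

Exact match gives no credit for a nearly correct answer, while ROUGE-L rewards the in-order overlap ("the cat on the mat" here, 5 of 6 tokens).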

14 Topics

Intro to Retriever-Reader and Haystack

What is Elasticsearch?

Elasticsearch Setup (Windows)

Elasticsearch Setup (Linux)

Elasticsearch in Haystack

Sparse Retrievers

Cleaning the Index

Implementing a BM25 Retriever

What is FAISS?

Further Materials for FAISS

FAISS in Haystack

What is DPR?

The DPR Architecture

Retriever-Reader Stack
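
To make the sparse-retriever topics concrete, here is a from-scratch Okapi BM25 scorer. It assumes whitespace tokenization, a Lucene-style smoothed IDF, and k1 = 1.2, b = 0.75 (Elasticsearch's defaults). It is a sketch of the scoring idea, not Haystack's or Elasticsearch's implementation, so its absolute scores will not match theirs.

```python
import math
from collections import Counter

def bm25_scores(query, documents, k1=1.2, b=0.75):
    """Score every document against the query with Okapi BM25."""
    docs = [doc.lower().split() for doc in documents]
    n_docs = len(docs)
    avgdl = sum(len(d) for d in docs) / n_docs
    # Document frequency: number of documents containing each term
    df = Counter(term for d in docs for term in set(d))
    scores = []
    for d in docs:
        tf = Counter(d)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            # Smoothed IDF: rarer terms contribute more, and the value stays positive
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # Term-frequency saturation (k1) with document-length normalization (b)
            norm = tf[term] + k1 * (1 - b + b * len(d) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores

docs = ["the cat sat on the mat", "dogs chase cats", "a cat and a dog"]
print(bm25_scores("cat", docs))
```

Note that "cats" does not match "cat": sparse retrievers rely on exact term overlap, which is the gap that dense retrievers such as DPR are meant to close.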

3 Topics

ODQA Stack Structure

Creating the Database

Building the Haystack Pipeline

6 Topics

Introduction to Similarity

Extracting The Last Hidden State Tensor

Sentence Vectors With Mean Pooling

Using Cosine Similarity

Similarity With Sentence-Transformers

Further Learning
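
Mean pooling and cosine similarity reduce to a few lines of arithmetic. In practice the token vectors would come from a model's last hidden state (for a Hugging Face model, `outputs.last_hidden_state`, shaped batch x tokens x hidden size); this sketch assumes small hand-written vectors for a single sentence so the arithmetic is visible, and the function names are hypothetical.

```python
import math

def mean_pool(token_embeddings, attention_mask):
    """Average one sentence's token vectors, skipping padding positions (mask == 0)."""
    dim = len(token_embeddings[0])
    summed = [0.0] * dim
    count = 0
    for vector, keep in zip(token_embeddings, attention_mask):
        if keep:
            summed = [s + v for s, v in zip(summed, vector)]
            count += 1
    return [s / max(count, 1) for s in summed]  # max() guards against an all-padding input

def cosine_similarity(a, b):
    """Dot product divided by the product of the vector lengths (range -1 to 1)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

sentence_a = mean_pool([[1.0, 2.0], [3.0, 4.0], [9.0, 9.0]], [1, 1, 0])  # last row is padding
sentence_b = mean_pool([[2.0, 2.0], [4.0, 6.0]], [1, 1])
print(sentence_a, sentence_b)  # [2.0, 3.0] [3.0, 4.0]
print(cosine_similarity(sentence_a, sentence_b))
```

Applying the attention mask before averaging matters: without it, the padding row in `sentence_a` would pull the sentence vector toward [9.0, 9.0].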

15 Topics

Visual Guide to BERT Pretraining

Introduction to BERT For Pretraining Code

BERT Pretraining - Masked-Language Modeling (MLM)

BERT Pretraining - Next Sentence Prediction (NSP)

The Logic of MLM

Pre-training with MLM - Data Preparation

Pre-training with MLM - Training

Pre-training with MLM - Training with Trainer

The Logic of NSP

Pre-training with NSP - Data Preparation

Pre-training with NSP - DataLoader

Set Up the NSP Pre-training Loop

The Logic of MLM and NSP

Pre-training with MLM and NSP - Data Preparation

Set Up the DataLoader and Model Pre-training for MLM and NSP
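
The masking step behind MLM data preparation can be sketched in plain Python. It follows the scheme described in the BERT paper (select about 15% of non-special tokens, then replace 80% of those with [MASK], 10% with a random token, and leave 10% unchanged); the course's own code may use a simpler rule. The IDs 103 ([MASK]) and 101/102/0 ([CLS]/[SEP]/[PAD]) and the vocabulary size 30522 are assumptions based on `bert-base-uncased`, and labels of -100 follow the PyTorch/Hugging Face convention for positions the loss ignores.

```python
import random

def mask_tokens(input_ids, mask_id=103, vocab_size=30522,
                special_ids=(0, 101, 102), mask_prob=0.15, seed=None):
    """Return (masked_ids, labels) for masked-language-modeling pre-training."""
    rng = random.Random(seed)
    masked = list(input_ids)
    labels = [-100] * len(input_ids)  # -100: position ignored by the loss
    for i, token in enumerate(input_ids):
        # Never mask special tokens; select every other token with probability mask_prob
        if token in special_ids or rng.random() >= mask_prob:
            continue
        labels[i] = token  # the model must predict the original token here
        roll = rng.random()
        if roll < 0.8:
            masked[i] = mask_id                    # 80%: replace with [MASK]
        elif roll < 0.9:
            masked[i] = rng.randrange(vocab_size)  # 10%: random token (may hit a special ID)
        # remaining 10%: keep the original token unchanged
    return masked, labels

ids = [101, 7592, 2088, 2003, 2307, 102, 0, 0]  # arbitrary example IDs
masked_ids, labels = mask_tokens(ids, seed=42)
print(masked_ids)
print(labels)
```

Keeping some selected tokens unchanged or randomized means the model cannot assume that only [MASK] positions need predicting, which matters because [MASK] never appears during fine-tuning.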
